Introduction to Database Performance Optimization

Most modern web applications handle a lot of data. Databases come in many types that store and retrieve data in different ways, but they share many of the same performance concerns. For web applications, database performance directly affects user experience: poor performance leads to slow response times, frustrated users, and lost business opportunities.

A performant database is therefore crucial for keeping web applications fast and reliable. In this blog post, we’ll explore strategies and best practices for optimizing database performance.

Database performance optimization involves improving the speed and efficiency of database queries, reducing latency, and increasing throughput. By optimizing your database, you ensure faster query execution times and better overall application performance.

Understanding Key Database Performance Metrics

To effectively optimize your database, it’s important to understand key performance metrics. These metrics help you identify bottlenecks and measure the impact of optimization efforts.

Query execution time measures how long it takes for a database to process a query. Faster execution times mean better performance. Long query execution times can slow down your application and frustrate users.

Database throughput is the number of transactions or queries a database can handle in a given period, usually expressed per second. Higher throughput means the database can serve more work with the same resources. Optimizing throughput ensures that your database can handle a high volume of transactions without slowing down.

Latency is the total time it takes for a query to travel from the application to the database, be executed, and return its data to the application. Lower latency results in quicker response times and faster access to data for your users.
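All three metrics can be measured from the application side. The following is a minimal sketch. It uses Python’s built-in sqlite3 module with an in-memory database and a hypothetical person table as a stand-in for your real database. There is no network hop here, so per-query latency and execution time are essentially the same. Throughput is measured for a single client issuing queries one after another, not under concurrent load.

```python
import sqlite3
import time


def measure(conn: sqlite3.Connection, sql: str, params: tuple = (), runs: int = 200) -> dict:
    """Run one query repeatedly and report simple client-side performance metrics."""
    latencies = []
    start = time.perf_counter()
    for _ in range(runs):
        t0 = time.perf_counter()
        conn.execute(sql, params).fetchall()  # include fetching the rows, as an application would
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    latencies.sort()
    return {
        "avg_ms": 1000 * sum(latencies) / runs,  # mean time per query
        "p95_ms": 1000 * latencies[int(0.95 * (runs - 1))],  # approximate 95th percentile
        "throughput_qps": runs / elapsed,  # queries completed per second
    }


def demo_connection() -> sqlite3.Connection:
    """Hypothetical table with a few thousand rows to measure against."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT, active INTEGER)")
    conn.executemany(
        "INSERT INTO person (name, active) VALUES (?, ?)",
        [(f"user{i}", i % 2) for i in range(5000)],
    )
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    print(measure(conn, "SELECT id, name FROM person WHERE active = ?", (1,)))
```

Tail percentiles such as p95 are often more telling than the average, because a few slow queries can hurt users even when the mean looks healthy.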

Best Practices for Database Design

Proper database design is the foundation of optimal performance. By following best practices in schema design, normalization, and choosing the right database type, you can significantly enhance performance.

A well-structured schema can greatly improve database performance. Ensure that your tables are designed to minimize redundancy and that relationships between tables are clearly defined.

Normalization organizes data into separate tables so that each fact is stored only once, while denormalization deliberately duplicates or combines data to improve read performance. Each approach has trade-offs. Normalization protects data integrity, but reads may need JOINs across several tables, which can slow them down. Denormalization speeds up reads, but the redundant copies must be kept in sync on every write, which adds work and risks inconsistencies.
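The next sketch illustrates the trade-off with Python’s sqlite3 module. It uses City and State tables like the ones in the query examples later in this post; the column layout and sample rows are assumptions. In the normalized design, reading a city together with its state name requires a JOIN. The denormalized table answers the same question from a single table, but renaming a state means updating every copied value.

```python
import sqlite3


def build_schemas() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Normalized: each state name is stored once; cities reference it by ID.
        CREATE TABLE State (ID INTEGER PRIMARY KEY, NAME TEXT NOT NULL);
        CREATE TABLE City (
            ID INTEGER PRIMARY KEY,
            NAME TEXT NOT NULL,
            STATE_ID INTEGER NOT NULL REFERENCES State(ID)
        );
        -- Denormalized: the state name is copied into every city row.
        CREATE TABLE CityDenorm (ID INTEGER PRIMARY KEY, NAME TEXT NOT NULL, STATE_NAME TEXT NOT NULL);

        INSERT INTO State VALUES (1, 'Texas'), (2, 'Ohio');
        INSERT INTO City VALUES (1, 'Austin', 1), (2, 'Dallas', 1), (3, 'Columbus', 2);
        INSERT INTO CityDenorm VALUES (1, 'Austin', 'Texas'), (2, 'Dallas', 'Texas'), (3, 'Columbus', 'Ohio');
        """
    )
    return conn


def cities_normalized(conn: sqlite3.Connection) -> list:
    # The read needs a JOIN to resolve each city's state name.
    return conn.execute(
        "SELECT c.NAME, s.NAME FROM City c JOIN State s ON c.STATE_ID = s.ID ORDER BY c.ID"
    ).fetchall()


def cities_denormalized(conn: sqlite3.Connection) -> list:
    # The same read comes from a single table, with no JOIN.
    return conn.execute("SELECT NAME, STATE_NAME FROM CityDenorm ORDER BY ID").fetchall()


def rename_state(conn: sqlite3.Connection, old: str, new: str) -> tuple:
    """Rename a state in both designs and return how many rows each had to change."""
    with conn:
        normalized = conn.execute("UPDATE State SET NAME = ? WHERE NAME = ?", (new, old)).rowcount
        denormalized = conn.execute(
            "UPDATE CityDenorm SET STATE_NAME = ? WHERE STATE_NAME = ?", (new, old)
        ).rowcount
    return normalized, denormalized
```

Renaming Texas changes one row in the normalized design but two rows in the denormalized one. On a real dataset, that gap grows with the number of copies.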

The choice between SQL (relational) and NoSQL databases depends on your application’s needs. SQL databases are generally a strong fit for complex queries and multi-row transactions, while many NoSQL databases are designed to scale out across many servers for large, distributed datasets. Understanding the strengths and weaknesses of each can help you choose the right database type for your application.

Strategies for Query Optimization

Efficient queries are key to database performance. By optimizing your SQL queries, using execution plans, and implementing caching techniques, you can significantly improve performance.

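- To write efficient SQL queries, avoid SELECT * and specify only the columns you need. Use JOINs judiciously, and filter rows with WHERE rather than HAVING: WHERE discards rows before grouping and aggregation, while HAVING is meant for conditions on aggregated values.

```sql
SELECT p.id, p.name
FROM PERSON p
WHERE p.ACTIVE = true;
```

- Use efficient conditions and comparisons in your queries. Analyze the business requirements first and plan each query accordingly. When joining several tables, choose the join type the requirement calls for: an INNER JOIN returns only rows that match on both sides, while a LEFT JOIN, as in the following query, also keeps cities that have no matching state and returns NULL for the state name.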

```sql
SELECT s.NAME AS StateName, c.NAME AS CityName
FROM City c
LEFT JOIN State s ON c.STATE_ID = s.ID;
```

- Avoiding N+1 queries is crucial for optimizing database performance. N+1 queries occur when an application makes an additional query for each row returned by the initial query, leading to a performance bottleneck. By using techniques such as eager loading or join fetching, you can retrieve all necessary data in a single query rather than multiple subsequent queries. This reduces the number of database round-trips, significantly improving performance and reducing load on the database.

- Batch processing groups multiple database operations into a single round-trip, and often a single transaction, which reduces the overhead of executing each operation individually. This approach is particularly beneficial for insert, update, or delete operations on large datasets. Processing data in batches cuts down the number of network round-trips and commits, leading to more efficient use of resources and faster execution times. Batch processing can be implemented using techniques like JDBC batch updates or bulk operations in ORMs such as Hibernate.

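- Query execution plans show how the database processes your queries, for example whether it scans a whole table or uses an index. Use the EXPLAIN statement in MySQL or PostgreSQL (or EXPLAIN ANALYZE, which also runs the query and reports actual timings) to analyze execution plans and identify bottlenecks.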

- Implement caching to store frequently accessed data in memory, reducing the need for repetitive queries. Use query caching or result set caching to improve performance.
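The N+1 pattern and its fix can be seen side by side in the next sketch. It uses Python’s sqlite3 module and hypothetical City/State tables. CountingConnection is a helper invented for this sketch that counts the statements sent to the database. An ORM doing eager loading or join fetching would generate a JOIN similar to load_with_join for you.

```python
import sqlite3


class CountingConnection:
    """Wraps a sqlite3 connection and counts the statements sent through it."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.queries = 0

    def execute(self, sql: str, params: tuple = ()):
        self.queries += 1
        return self.conn.execute(sql, params)


def setup() -> CountingConnection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE State (ID INTEGER PRIMARY KEY, NAME TEXT NOT NULL);
        CREATE TABLE City (ID INTEGER PRIMARY KEY, NAME TEXT NOT NULL, STATE_ID INTEGER NOT NULL);
        """
    )
    conn.executemany("INSERT INTO State VALUES (?, ?)", [(i, f"State {i}") for i in range(1, 6)])
    conn.executemany(
        "INSERT INTO City VALUES (?, ?, ?)",
        [(i, f"City {i}", i % 5 + 1) for i in range(1, 51)],
    )
    return CountingConnection(conn)


def load_n_plus_one(db: CountingConnection) -> list:
    # One query for the list of cities.
    cities = db.execute("SELECT ID, NAME, STATE_ID FROM City ORDER BY ID").fetchall()
    result = []
    for _, city_name, state_id in cities:
        # Plus one extra query per city (N of them): the N+1 pattern.
        (state_name,) = db.execute("SELECT NAME FROM State WHERE ID = ?", (state_id,)).fetchone()
        result.append((city_name, state_name))
    return result


def load_with_join(db: CountingConnection) -> list:
    # The same data in a single query and a single round-trip.
    return db.execute(
        "SELECT c.NAME, s.NAME FROM City c JOIN State s ON c.STATE_ID = s.ID ORDER BY c.ID"
    ).fetchall()
```

With 50 cities, load_n_plus_one issues 51 statements and load_with_join issues one, yet both return the same rows.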
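Batching can be sketched with Python’s sqlite3 module and a hypothetical person table. insert_row_by_row commits after every row. insert_batched sends rows in chunks with executemany inside one transaction, so the whole load either commits once or rolls back entirely. The batch size of 1,000 is an arbitrary assumption that you would tune for your workload.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    return conn


def insert_row_by_row(conn: sqlite3.Connection, rows: list) -> None:
    """Baseline: one statement and one commit (one transaction) per row."""
    for row in rows:
        conn.execute("INSERT INTO person (id, name) VALUES (?, ?)", row)
        conn.commit()


def insert_batched(conn: sqlite3.Connection, rows: list, batch_size: int = 1000) -> None:
    """Send rows in chunks with executemany, all inside a single transaction."""
    with conn:  # commits once on success, rolls back every chunk on error
        for start in range(0, len(rows), batch_size):
            conn.executemany(
                "INSERT INTO person (id, name) VALUES (?, ?)",
                rows[start:start + batch_size],
            )
```

In JDBC, the equivalent pattern is PreparedStatement.addBatch() followed by executeBatch(). With an embedded database like SQLite, most of the saving comes from committing once. With a networked database, making fewer round-trips matters as well.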

Indexing Strategies for Improved Performance

Indexes are vital for speeding up query performance. By understanding different types of indexes and best practices for creating and maintaining them, you can improve query execution times.

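Different types of indexes serve different purposes. B-tree indexes, the default in most relational databases, support both equality and range queries. Bitmap indexes (available in Oracle, for example) are useful for columns with low cardinality. Hash indexes are efficient for equality searches but cannot serve range queries.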

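Index columns that are frequently used in WHERE clauses, JOIN conditions, and ORDER BY clauses. Maintain indexes regularly so they remain effective, for example by rebuilding fragmented indexes and keeping table statistics up to date. Drop unused indexes, because every index consumes storage and adds work to each INSERT, UPDATE, and DELETE.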

Indexes allow the database to find rows more quickly and efficiently. For example, without an index, a query might have to scan an entire table, but with an index, it can quickly locate the relevant rows.
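The effect of an index shows up in the execution plan. This sketch runs SQLite’s EXPLAIN QUERY PLAN through Python’s sqlite3 module against a hypothetical person table. The exact plan wording varies between SQLite versions. Before the index exists, the plan reports a full-table SCAN; afterwards, it reports a SEARCH using idx_person_email. MySQL and PostgreSQL expose the same information through EXPLAIN, each in its own format.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


def index_demo() -> tuple:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO person (name, email) VALUES (?, ?)",
        [(f"user{i}", f"user{i}@example.com") for i in range(10_000)],
    )
    query = "SELECT id, name FROM person WHERE email = ?"
    args = ("user42@example.com",)

    before = plan(conn, query, args)  # no index on email: the whole table is scanned
    conn.execute("CREATE INDEX idx_person_email ON person(email)")
    after = plan(conn, query, args)  # the index lets SQLite jump straight to matching rows
    row = conn.execute(query, args).fetchone()
    return before, after, row


if __name__ == "__main__":
    before, after, row = index_demo()
    print("before:", before)
    print("after: ", after)
    print("row:   ", row)
```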

Leveraging Caching Mechanisms

Caching can dramatically improve database performance by reducing the load on your database and speeding up data retrieval. Depending on the application’s needs, several caching mechanisms are available; the most common ones are described below.

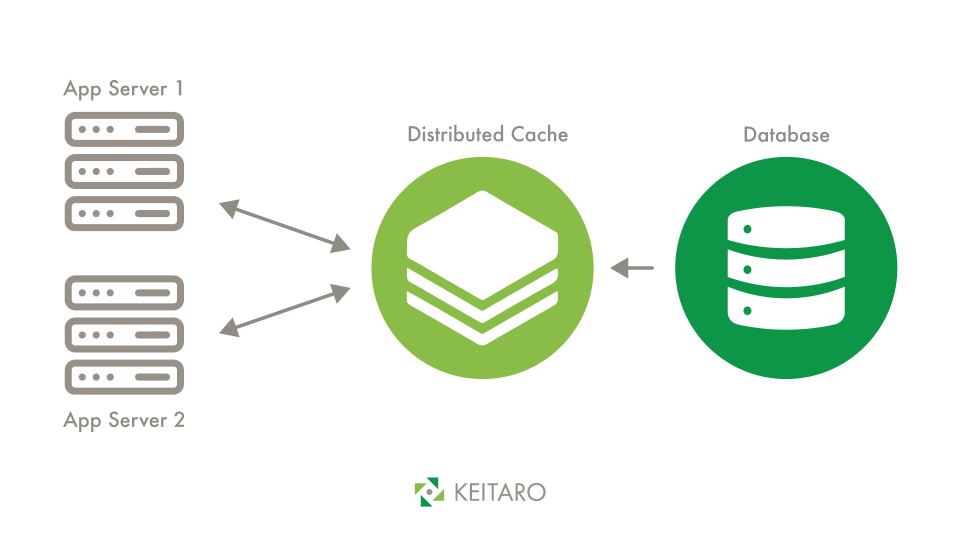

Use in-memory caching systems like Redis or Memcached to store frequently accessed data. This reduces the load on your database and speeds up data retrieval.

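Take advantage of database-level caching as well. MySQL’s InnoDB buffer pool and PostgreSQL’s shared buffers keep frequently accessed data pages in memory. PostgreSQL’s materialized views can store the results of expensive queries until they are refreshed with REFRESH MATERIALIZED VIEW. Note that MySQL’s query cache was deprecated in MySQL 5.7 and removed in MySQL 8.0, so query result caching there is usually handled at the application level.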

While caching can improve performance, it can also lead to stale data. Implement cache invalidation strategies to ensure data consistency. For example, use time-based or event-based invalidation to keep your cache up to date.
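The next block is a minimal cache-aside sketch that combines both invalidation styles. TTLCache is a hypothetical in-process dictionary standing in for Redis or Memcached (it is not their client API), and the person table is assumed. Reads check the cache first and fall back to the database on a miss. Entries expire after a fixed time-to-live, and an update deletes the affected key so the next read fetches fresh data.

```python
import sqlite3
import time


class TTLCache:
    """In-process stand-in for Redis/Memcached: maps key -> (value, expiry timestamp)."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data = {}

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None  # miss (this sketch never caches None values)
        value, expires_at = entry
        if self.clock() >= expires_at:  # time-based invalidation
            del self._data[key]
            return None
        return value

    def set(self, key, value):
        self._data[key] = (value, self.clock() + self.ttl)

    def delete(self, key):
        self._data.pop(key, None)


class PersonRepository:
    def __init__(self, conn: sqlite3.Connection, cache: TTLCache):
        self.conn = conn
        self.cache = cache
        self.db_reads = 0  # how often the database was actually queried

    def get_name(self, person_id: int):
        key = f"person:{person_id}:name"
        name = self.cache.get(key)
        if name is None:  # cache miss: read from the database, then populate the cache
            self.db_reads += 1
            row = self.conn.execute("SELECT name FROM person WHERE id = ?", (person_id,)).fetchone()
            name = row[0] if row else None
            if name is not None:
                self.cache.set(key, name)
        return name

    def rename(self, person_id: int, new_name: str) -> None:
        with self.conn:
            self.conn.execute("UPDATE person SET name = ? WHERE id = ?", (new_name, person_id))
        self.cache.delete(f"person:{person_id}:name")  # event-based invalidation on write


def make_repo(clock=time.monotonic, ttl_seconds: float = 60.0) -> PersonRepository:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO person (id, name) VALUES (1, 'Ada')")
    return PersonRepository(conn, TTLCache(ttl_seconds, clock))
```

Deleting the key on write, rather than updating it in place, keeps the write path simple: the next read repopulates the cache from the database.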

Database Configuration and Tuning Tips

Fine-tuning your database settings can yield significant performance gains. Adjust database parameters, perform routine maintenance tasks, and use monitoring tools to keep your database running smoothly.

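Configure parameters such as the buffer pool size (innodb_buffer_pool_size in MySQL, shared_buffers in PostgreSQL), cache sizes, and connection limits (max_connections in both) to match your workload and available memory. Proper configuration ensures your database operates efficiently.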

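Perform routine maintenance tasks such as vacuuming (reclaiming space left by deleted or updated rows), analyzing tables (refreshing the statistics the query planner relies on), and rebuilding indexes to keep your database running smoothly. These tasks help optimize space usage and improve query performance.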
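SQLite provides commands with the same names, which makes a compact, runnable illustration possible through Python’s sqlite3 module. The example uses a hypothetical log table stored in a temporary file. Deleting rows leaves free pages behind. VACUUM rebuilds the file and reclaims those pages, and ANALYZE collects statistics into the sqlite_stat1 table for the query planner. PostgreSQL’s VACUUM and ANALYZE, or MySQL’s OPTIMIZE TABLE and ANALYZE TABLE, play similar roles but differ in the details.

```python
import os
import sqlite3
import tempfile


def maintenance_demo() -> tuple:
    with tempfile.TemporaryDirectory() as tmp:
        conn = sqlite3.connect(os.path.join(tmp, "demo.db"))
        # The usual default; set explicitly so deleted pages stay on the freelist until VACUUM.
        conn.execute("PRAGMA auto_vacuum = NONE")
        conn.execute("CREATE TABLE log (id INTEGER PRIMARY KEY, level TEXT, msg TEXT)")
        conn.execute("CREATE INDEX idx_log_level ON log(level)")
        conn.executemany(
            "INSERT INTO log (level, msg) VALUES (?, ?)",
            [("ERROR" if i % 10 == 0 else "INFO", "x" * 200) for i in range(10_000)],
        )
        conn.commit()

        # Delete most rows: emptied pages go onto the freelist, but the file does not shrink.
        conn.execute("DELETE FROM log WHERE id > 1000")
        conn.commit()
        free_before = conn.execute("PRAGMA freelist_count").fetchone()[0]

        conn.execute("VACUUM")  # must run outside a transaction, hence the commit above
        free_after = conn.execute("PRAGMA freelist_count").fetchone()[0]

        conn.execute("ANALYZE")  # refresh the statistics the query planner uses
        stats_rows = conn.execute("SELECT COUNT(*) FROM sqlite_stat1").fetchone()[0]
        conn.close()
    return free_before, free_after, stats_rows


if __name__ == "__main__":
    print(maintenance_demo())
```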

Use tools like pgAdmin, MySQL Workbench, or Oracle Enterprise Manager to monitor and profile your database performance. These tools provide insights into performance metrics and help identify areas for improvement.

Load Balancing and Replication Techniques

Distribute database load and ensure high availability by implementing load balancing and replication techniques.

Implement load balancers to distribute queries across multiple database servers. This helps prevent any single server from becoming a bottleneck, improving overall performance.

Database Replication for High Availability

Set up replication to maintain multiple copies of your database. This provides data redundancy and allows read operations to be spread across replicas, improving performance and reliability.
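Read/write splitting can be sketched as a small router. Writes go to the primary, and reads are spread round-robin across replicas, which is also the simplest load-balancing policy. ReplicaRouter is a hypothetical helper, and plain strings stand in for real connection objects. With asynchronous replication, a replica may lag slightly behind the primary, so the sketch sends reads that must see a just-committed write to the primary.

```python
import itertools


class ReplicaRouter:
    """Hypothetical router: writes go to the primary, reads rotate across replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        # itertools.cycle gives simple round-robin; None means "no replicas configured".
        self._replicas = itertools.cycle(list(replicas)) if replicas else None

    def for_write(self):
        return self.primary

    def for_read(self, require_fresh: bool = False):
        # Replicas may lag behind the primary, so reads that need fresh data go to the primary.
        if require_fresh or self._replicas is None:
            return self.primary
        return next(self._replicas)


if __name__ == "__main__":
    router = ReplicaRouter("primary", ["replica-1", "replica-2"])
    print([router.for_read() for _ in range(4)])
    print(router.for_write(), router.for_read(require_fresh=True))
```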

Use read replicas to handle read-heavy workloads. Shard your database to distribute data across multiple servers, improving write performance and scalability.
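Sharding needs a rule that maps each row’s shard key to a server, and hash-based sharding is a common starting point. The sketch below assumes four hypothetical shards, each represented by a separate in-memory SQLite database. It uses zlib.crc32 rather than Python’s built-in hash(), because hash() of a string changes between processes and therefore cannot route data consistently.

```python
import sqlite3
import zlib

NUM_SHARDS = 4


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a shard key to a shard index with a stable hash."""
    return zlib.crc32(key.encode("utf-8")) % num_shards


def make_shards(num_shards: int = NUM_SHARDS) -> list:
    shards = []
    for _ in range(num_shards):
        conn = sqlite3.connect(":memory:")  # stand-in for a separate database server
        conn.execute("CREATE TABLE person (username TEXT PRIMARY KEY, name TEXT)")
        shards.append(conn)
    return shards


def insert_person(shards: list, username: str, name: str) -> None:
    conn = shards[shard_for(username, len(shards))]
    with conn:
        conn.execute("INSERT INTO person (username, name) VALUES (?, ?)", (username, name))


def find_person(shards: list, username: str):
    # Only the shard that owns the key is queried; the others are never touched.
    conn = shards[shard_for(username, len(shards))]
    row = conn.execute("SELECT name FROM person WHERE username = ?", (username,)).fetchone()
    return row[0] if row else None
```

Simple modulo hashing remaps most keys when the number of shards changes. Techniques such as consistent hashing are commonly used to limit that data movement.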

Effective Management of Database Connections

Efficient management of database connections is crucial for maintaining performance and stability.

Use connection pooling to reuse database connections instead of opening a new one for each request. This reduces overhead and improves response times.

Configure connection limits and timeouts to prevent resource exhaustion and ensure the stability of your database. Proper configuration helps manage the load on your database servers.

Ensure your application closes connections promptly and avoids long-running transactions. Efficient use of connections reduces resource contention and improves performance.
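The three practices above fit together in a connection pool. This sketch assumes Python’s sqlite3 module and a hypothetical ConnectionPool class; in practice you would use your driver’s or framework’s pool, such as HikariCP for JDBC applications. The pool reuses idle connections and never opens more than max_size of them. It raises TimeoutError when no connection becomes free within acquire_timeout, and it always takes connections back promptly, rolling back any transaction the caller left open.

```python
import queue
import sqlite3
import threading
from contextlib import contextmanager


class ConnectionPool:
    """Minimal fixed-size pool: a sketch, not a production implementation."""

    def __init__(self, factory, max_size: int = 5, acquire_timeout: float = 2.0):
        self._factory = factory  # callable that opens a new connection
        self._max_size = max_size  # connection limit
        self._timeout = acquire_timeout  # how long a caller may wait for a free connection
        self._idle = queue.Queue()
        self._created = 0
        self._lock = threading.Lock()

    def _acquire(self):
        try:
            return self._idle.get_nowait()  # reuse an idle connection if one is available
        except queue.Empty:
            pass
        with self._lock:
            if self._created < self._max_size:
                conn = self._factory()
                self._created += 1  # count only connections that opened successfully
                return conn
        try:
            # Pool exhausted: wait for another caller to release a connection.
            return self._idle.get(timeout=self._timeout)
        except queue.Empty:
            raise TimeoutError("no database connection available") from None

    @contextmanager
    def connection(self):
        conn = self._acquire()
        try:
            yield conn
        finally:
            conn.rollback()  # discard any transaction the caller left open
            self._idle.put(conn)  # return the connection promptly, even after an error


if __name__ == "__main__":
    pool = ConnectionPool(lambda: sqlite3.connect(":memory:", check_same_thread=False), max_size=2)
    with pool.connection() as conn:
        print(conn.execute("SELECT 1").fetchone())
```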

Monitoring and Troubleshooting Database Performance

Continuous monitoring and proactive troubleshooting are key to maintaining performance and reliability.

Utilize tools like Grafana, Prometheus, and New Relic to monitor database performance metrics and set up alerts for potential issues. These tools provide real-time insights into performance and help identify problems before they impact users.

Regularly analyze slow queries and use profiling tools to identify and resolve performance bottlenecks. Implement fixes such as query optimization, index creation, or configuration changes to improve performance.
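Most databases can log slow queries on the server side, for example through log_min_duration_statement in PostgreSQL or MySQL’s slow query log with long_query_time. The following is a complementary application-side sketch. It assumes Python’s sqlite3 module and a hypothetical execute_logged helper that times every query and records those above a threshold, so they can later be examined with EXPLAIN.

```python
import logging
import sqlite3
import time

logger = logging.getLogger("slow_queries")


def execute_logged(conn, sql, params=(), threshold_ms=100.0, slow_log=None):
    """Execute a query and log it if it takes at least threshold_ms milliseconds."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed_ms = (time.perf_counter() - start) * 1000
    if elapsed_ms >= threshold_ms:
        logger.warning("slow query (%.1f ms): %s", elapsed_ms, sql)
        if slow_log is not None:
            slow_log.append((round(elapsed_ms, 3), sql))  # keep a copy for later analysis
    return rows


if __name__ == "__main__":
    logging.basicConfig(level=logging.WARNING)
    conn = sqlite3.connect(":memory:")
    execute_logged(conn, "SELECT 1", threshold_ms=0.0)  # a threshold of 0 logs every query
```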

Set up automated responses and alerts for common issues. For example, increase cache size when hit rates drop or reallocate resources during peak loads. Automation helps maintain performance without constant manual intervention.

Conclusion

Optimizing database performance is an ongoing process that requires attention to detail and a proactive approach. By understanding key metrics, following best practices in design and querying, and using the right tools and strategies, you can ensure your web application’s database performs at its best. Continuous monitoring and regular maintenance are crucial to sustaining high performance and providing a seamless user experience.

Additional Resources

- Recommended Books and Courses on Database Optimization: “Database System Concepts” by Silberschatz, Korth, and Sudarshan, and online courses on platforms like Coursera, Udemy, and Pluralsight.

- Links to Further Reading and Tutorials: Explore tutorials on database management and optimization from trusted sources like MySQL documentation, PostgreSQL wiki, and Oracle’s official documentation.

- Tools for Database Management and Optimization: Tools such as pgAdmin, MySQL Workbench, Redis, Memcached, Grafana, and Prometheus are invaluable for database administrators and developers aiming to optimize performance.

By implementing these strategies and continuously refining your approach, you can achieve significant improvements in database performance, ensuring your web applications run efficiently and effectively.