As technology reaches into almost every walk of life, software development needs to be scalable, effective, and fast. DevOps has emerged as the set of practices that lets developers release new software and improve existing features quickly. As businesses migrate their architecture and infrastructure toward data-driven, cloud-native designs, interest in cloud computing, containerization, and orchestration solutions has surged. In this race for fast, efficient development, it is hard to ignore names like Docker and Kubernetes, which have revolutionized the way we build, deploy, and ship software at scale.

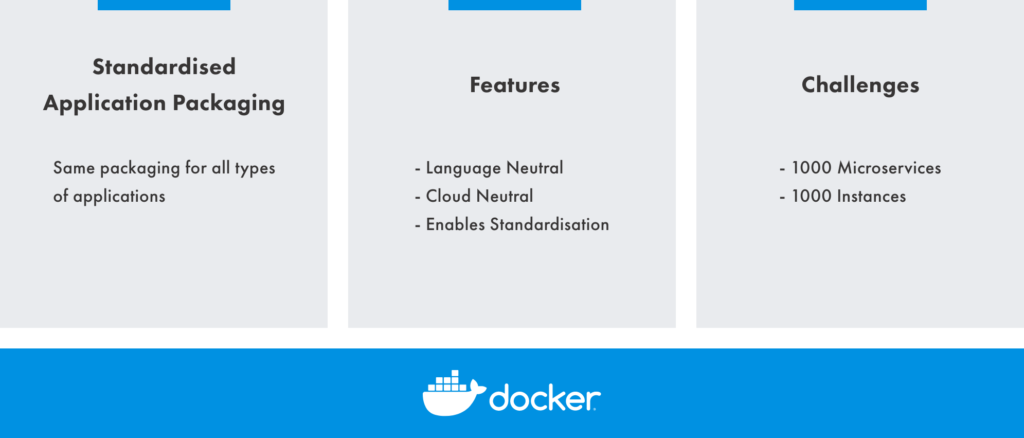

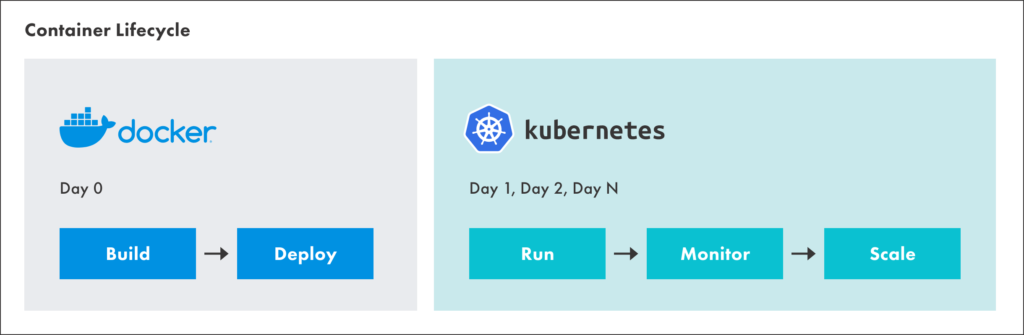

Docker is an open-source containerization platform that packages an application together with all of its dependencies into a container. With Docker, developers can create and run containers with just a few commands. Kubernetes, on the other hand, is an open-source container orchestration system that automates many of the manual processes involved in deploying, managing, and scaling containerized applications.

Why Docker & Kubernetes?

To deploy a piece of software on a given infrastructure, you need not only the software itself but also its dependencies: libraries, interpreters, subpackages, compilers, extensions, and so on.

You also need its configuration: settings, site-specific details, license keys, and database passwords. Running the same software on different machines, or having different teams run it, often causes different kinds of issues.

To solve these problems, the tech industry borrowed an idea from the shipping industry: the container, a standard packaging and distribution format that is generic and widespread, enabling greatly increased carrying capacity, lower costs, economies of scale, and ease of handling. The container format contains everything the application needs to run, baked into an image file that can be executed by a container runtime.

You might ask: how is this different from a virtual machine image? A VM image also contains everything the application needs to run, but a lot more besides. Because the virtual machine contains lots of unrelated programs, libraries, and things the application will never use, most of its space is wasted. As a result, transferring VM images across the network is far slower than transferring optimized container images.

Even worse, virtual machines are virtual: the underlying physical CPU effectively implements an emulated CPU, which the virtual machine runs on. The virtualization layer has a dramatic negative effect on performance: in tests, virtualized workloads run noticeably slower than the equivalent containers.

In comparison, containers run directly on the real CPU, with no virtualization overhead, just as ordinary binary executables do.

And because containers hold only the files they need, they’re much smaller than VM images. They also use a clever technique, content-addressable filesystem layers, which can be shared and reused between containers.
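To make content-addressable layers more concrete, here is a minimal Python sketch. It is a toy model, not Docker's actual storage implementation: each layer is keyed by the SHA-256 digest of its bytes, so two images that share base layers store those layers only once. The `LayerStore` class, the `build_image` function, and the sample layer contents are all hypothetical.

```python
import hashlib


class LayerStore:
    """Toy content-addressable store: a layer's key is the SHA-256 digest of its bytes."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def put(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        # Identical content always produces the same digest, so it is stored only once.
        self.blobs.setdefault(digest, content)
        return digest


def build_image(store: LayerStore, layers: list[bytes]) -> list[str]:
    """In this model an 'image' is just the ordered list of its layer digests."""
    return [store.put(layer) for layer in layers]


store = LayerStore()
base = b"base OS files"        # hypothetical shared base layer
runtime = b"language runtime"  # hypothetical shared runtime layer
web = build_image(store, [base, runtime, b"web app code"])
worker = build_image(store, [base, runtime, b"worker code"])

print(len(web) + len(worker), "layer references,", len(store.blobs), "stored blobs")
# → 6 layer references, 4 stored blobs
```

Because the key is derived from the content itself, the two images in this model point at the same two base-layer blobs and differ only in their top layer.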

As we can see, not only is the container the unit of deployment and the unit of packaging; it is also the unit of reuse (the same container image can be used as a component of many different services), the unit of scaling, and the unit of resource allocation (a container can run anywhere sufficient resources are available for its own specific needs).

Developers no longer have to worry about maintaining different versions of the software to run on different operating systems, against different library and language versions, and so on. The only thing the container depends on is the operating system kernel (Linux, for example). Simply supply your application in a container image, and it will run on any platform that supports the standard container format and has a compatible kernel.

In fact, Docker is several different but related things: a container image format (think of it as a snapshot of the application and its dependencies); a container runtime library, which manages the life cycle of containers; a command-line tool for packaging and running containers; and an API for container management. It’s everything you need to “Build, Ship, and Run applications, Anywhere.”

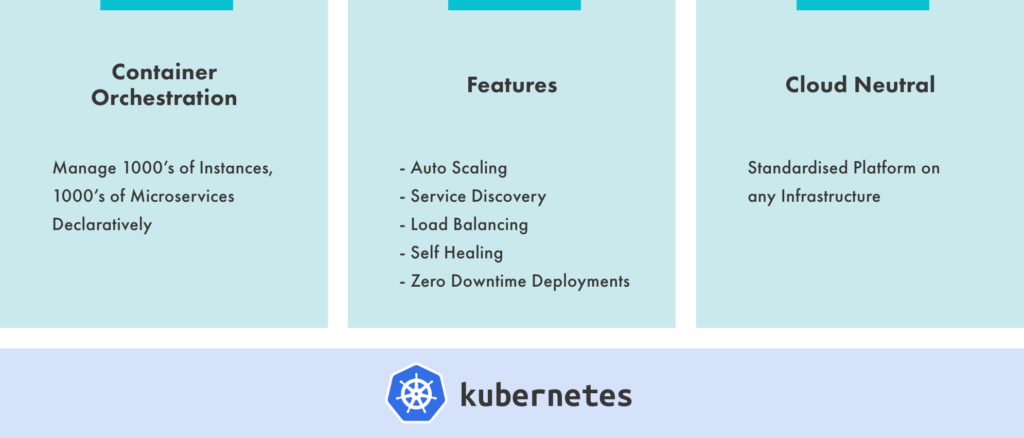

Kubernetes comes into play when an application is broken down into multiple microservices instead of being a monolith, each with its own lifecycle and operational needs. Kubernetes is not used to create application containers; it relies on a container runtime, such as containerd, to run them. Images built with Docker follow the standard OCI image format, so containers created with Docker can be managed by Kubernetes, which handles the main container management responsibilities: deployment, scaling, self-healing, and load balancing.

Kubernetes does the things that the very best system administrator would do: automation, failover, centralized logging, monitoring. It takes what we’ve learned in the DevOps community and makes it the default, out of the box.

—Kelsey Hightower (staff developer advocate at Google)

Kubernetes makes deployment easier by greatly reducing the time and effort each release takes.

Zero-downtime deployments are common because Kubernetes does rolling updates by default (starting containers with the new version, waiting until they become healthy, and then shutting down the old ones).
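The rolling-update sequence can be simulated in a few lines of Python. This is a hypothetical model, not Kubernetes code: it behaves like a Deployment configured with `maxSurge: 1` and `maxUnavailable: 0` (start one new pod, wait until it is healthy, then remove one old pod), and it assumes every new pod becomes healthy immediately. The `rolling_update` function and the version labels are made up for illustration.

```python
def rolling_update(replicas: int, old: str, new: str) -> list[list[str]]:
    """Replace `old` pods with `new` ones, one at a time.

    Returns the pod versions after each step. At no point are there fewer
    than `replicas` pods, which is what keeps the service available.
    """
    pods = [old] * replicas
    history = [list(pods)]
    for _ in range(replicas):
        pods.append(new)   # surge: start one pod running the new version
        # (a real controller would wait here until the new pod reports ready)
        pods.remove(old)   # only then shut down one old pod
        history.append(list(pods))
    return history


for step in rolling_update(3, "v1", "v2"):
    print(step)
# → ['v1', 'v1', 'v1']
# → ['v1', 'v1', 'v2']
# → ['v1', 'v2', 'v2']
# → ['v2', 'v2', 'v2']
```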

Kubernetes also provides facilities to help you implement continuous deployment practices such as canary deployments: gradually rolling out updates one server at a time to catch problems early. Another common practice is blue-green deployments: spinning up a new version of the system in parallel, and switching traffic over to it once it’s fully up and running.
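A canary rollout is usually driven by a simple loop: send a small share of traffic to the new version, check an error signal, and either widen the share or roll back. The Python sketch below models only that decision logic. Percentage-based traffic shifting is not a built-in feature of a plain Kubernetes Deployment (it is typically provided by a service mesh or a progressive-delivery tool), and the traffic steps, the 1% error threshold, and the `error_rate` callback are hypothetical.

```python
from typing import Callable


def canary_rollout(
    error_rate: Callable[[int], float],
    steps: tuple[int, ...] = (5, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> tuple[bool, int]:
    """Shift traffic to the new version in steps, rolling back if errors spike.

    `error_rate(percent)` returns the new version's observed error rate while it
    serves `percent`% of the traffic. Returns (promoted, percent_reached).
    """
    for percent in steps:
        if error_rate(percent) > max_error_rate:
            return False, percent  # problem caught early: send all traffic back to the old version
    return True, steps[-1]         # every step passed: the new version now serves all traffic


print(canary_rollout(lambda pct: 0.002))                       # → (True, 100)
print(canary_rollout(lambda pct: 0.05 if pct >= 25 else 0.0))  # → (False, 25)
```

Roughly speaking, a blue-green switch behaves like the special case `steps=(100,)`: traffic moves over in one go once the new environment is verified, and moves back if the check fails.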

Demand spikes will no longer take down your service because Kubernetes supports autoscaling. For example, if CPU utilization by a container reaches a certain level, Kubernetes can keep adding new replicas of the container until the utilization falls below the threshold. When demand falls, Kubernetes will scale down the replicas again, freeing up cluster capacity to run other workloads.
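The Kubernetes Horizontal Pod Autoscaler documentation describes its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies that formula to CPU utilization, adds a tolerance band so small fluctuations do not trigger scaling, and clamps the result to minimum and maximum replica counts. It is a simplification (the real controller also accounts for pod readiness, missing metrics, and stabilization windows), and the parameter defaults here are illustrative assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,  # e.g. average CPU utilization in percent
    target_utilization: float,   # the utilization you want each replica to run at
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA-style calculation of how many replicas to run."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave the replica count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))   # → 6  (load spike: scale out)
print(desired_replicas(6, 30, 60))   # → 3  (demand falls: scale back in)
print(desired_replicas(4, 63, 60))   # → 4  (within tolerance: no change)
print(desired_replicas(8, 120, 50))  # → 10 (capped at max_replicas)
```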

Because Kubernetes has redundancy and failover built-in, your application will be more reliable and resilient.
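Kubernetes gets much of this resilience from control loops that repeatedly compare the desired state (for example, "three replicas") with the observed state and act on the difference. The toy Python reconciler below illustrates only that idea; the `reconcile` function, the pod naming scheme, and the in-memory set of pods are hypothetical stand-ins for what the ReplicaSet controller does against the real cluster.

```python
import itertools

# Hypothetical source of unique pod names (real pod names come from Kubernetes itself).
_pod_ids = itertools.count(1)


def reconcile(desired: int, running: set[str]) -> set[str]:
    """One pass of a toy control loop: converge the running pods toward `desired`."""
    pods = set(running)
    while len(pods) < desired:
        pods.add(f"pod-{next(_pod_ids)}")  # start a replacement replica
    while len(pods) > desired:
        pods.remove(sorted(pods)[-1])      # stop a surplus replica
    return pods


pods = reconcile(3, set())   # initial rollout: pod-1, pod-2, pod-3
pods.discard("pod-2")        # simulate a crashed container or a failed node
pods = reconcile(3, pods)    # the loop notices the gap and starts pod-4
print(sorted(pods))          # → ['pod-1', 'pod-3', 'pod-4']
```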

The business will love Kubernetes too, because it cuts infrastructure costs and makes much better use of a given set of resources. Traditional servers, even cloud servers, are idle most of the time. The excess capacity that you need to handle demand spikes is essentially wasted under normal conditions.

Kubernetes takes that wasted capacity and uses it to run workloads, so you can achieve much higher utilization of your machines—and you get scaling, load balancing, and failover for free too.

Simply put, Kubernetes changed the way applications are developed and operated. It’s a core component in the DevOps world today. You can use Kubernetes on any major cloud provider, in bare-metal on-premises environments, and on a local developer machine. It is provider-agnostic: once you’ve defined the resources you use, you can run them on any Kubernetes cluster, regardless of the underlying cloud provider.

Stability, flexibility, a powerful API, open code, and an open developer community are some of the reasons why Kubernetes became an industry standard and why it is one of the flagship technologies in the Cloud Native Computing Foundation’s Landscape today.

To sum up, using Kubernetes with Docker brings some great benefits:

- Automation of numerous manual processes

- Easier application maintenance, since applications are broken down into smaller, containerized parts

- A more robust infrastructure for containers and higher availability for applications

- Better container balancing, with each container placed at the best available spot

- On-demand handling of extra load by replicating containers, which improves user experience and reduces resource waste

- Automated rollouts and rollbacks
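Placing containers "at the best spot" is the job of the Kubernetes scheduler, which first filters out nodes that cannot fit a pod and then scores the remaining ones. The Python sketch below is a heavily simplified model of that filter-then-score idea. The node names, the CPU and memory figures, and the "most free CPU after placement" scoring rule are assumptions for illustration, not the scheduler's actual plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def schedule(nodes: list[Node], cpu: int, memory: int) -> Optional[str]:
    """Pick a node for a pod requesting `cpu` millicores and `memory` MiB."""
    # Filter: keep only the nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu and n.free_memory_mib >= memory
    ]
    if not feasible:
        return None  # no node fits: the pod would stay Pending
    # Score: prefer the node with the most CPU left after placement (spreads load).
    best = max(feasible, key=lambda n: n.free_cpu_millicores - cpu)
    best.free_cpu_millicores -= cpu  # reserve the requested resources on that node
    best.free_memory_mib -= memory
    return best.name


cluster = [Node("node-a", 500, 1024), Node("node-b", 2000, 512), Node("node-c", 1500, 4096)]
print(schedule(cluster, cpu=1000, memory=1024))  # → node-c (a lacks CPU, b lacks memory)
print(schedule(cluster, cpu=250, memory=256))    # → node-b (most CPU left afterwards)
```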

Conclusion

Docker and Kubernetes are forward-looking platforms that are likely to remain part of software development for many years to come. Given their rich features and convenience, they deserve a fair chance in DevOps practices. Whether it is application modularity or tight integration with cloud platforms, this combination makes a strong case for their use in DevOps. Although the two platforms do different jobs, together they deliver excellent results and improve how containers are deployed in a distributed architecture.

Till next time, folks. Have fun and don’t ever be afraid to experiment 🙂